We did the initial project setup on containers in the previous "Demystifying Docker" series article. In this one, we will cover the database with persistence (examples with MS SQL Server, PostgreSQL, MySQL, MariaDB, Mongo) part. Still, first, we need to clarify a couple of things regarding the concept of the critical services (such as the database) running in containers.

Why NOT database in Docker?

TL;DR: Carefully consider using it in the production environment if your database is a critical service.

Docker was not created with persistence in mind, and one of the most appealing features of containers is their ability to be started and terminated at will. The data in the container is transient, and it is erased with the container.

Be careful using database services in containers in a PRODUCTION environment! If your database is not a critical service (which is rare), maybe you could try it in Docker; otherwise, you are entering a dangerous zone of having a headache in the (probably near) future.

If you are deploying on a cloud, the best option is to use cloud database services (AWS Relational Database Service (RDS), Azure Databases, Google Cloud Databases, Managed Databases on Digital Ocean, or an equivalent hosted database service of your cloud provider). This will simplify many management tasks such as updating minor versions, handling regular backups and even scaling up.

Why YES database in Docker?

TL;DR: For any environment (production with particular caution).

Let us suppose that we have a couple of different database engines in our projects, or have different versions of any (or all) of them, or your colleague has another operating system or with a different setup, or you need a quick start on the new developer joining the team, we can mention a lot more, but I am sure you get the point. You don't want to overload your working machine with different installations and versions when you can do it in one place easily through the docker CLI commands or the docker-compose specification. You can add or remove it without impacting the rest of the system.

As previously mentioned, containers don't exist with persistence in mind, but we usually want to have data even if we restart our container engine or working machine. Luckily, there are a couple of ways to preserve the data mentioned below.

Data persistence

There are two ways of Docker handling the persistence in general - volumes and bind mounts. Both allow you to mount a location on the host machine to a place in the container. This provides storage for the data even if the container is shut down, and there is no need to worry about the data being lost.

Bind mounts

Bind mount is not my preferred way of persisting data, but it still has its usage, so I will briefly run through the idea with PostgreSQL as an example. Bind mounts will mount a file or directory to the container from the host machine, and then it can be referenced via its absolute path. It relies on the host machine's filesystem having a specific directory structure. If not, it is necessary to explicitly create a path to the file or folder to place the storage. Additionally, bind mounts give us access to sensitive files, and we can change the host filesystem through processes running in a container, which is considered a security implication.

Usage

You can use the flags --mount and -v to use bind mounts on a container. The most noticeable difference between the two options is that --mount is more explicit and verbose, whereas -v is more of a shortcut for --mount. It combines all of the --mount options into a single field. To learn more, always reference the official documentation.

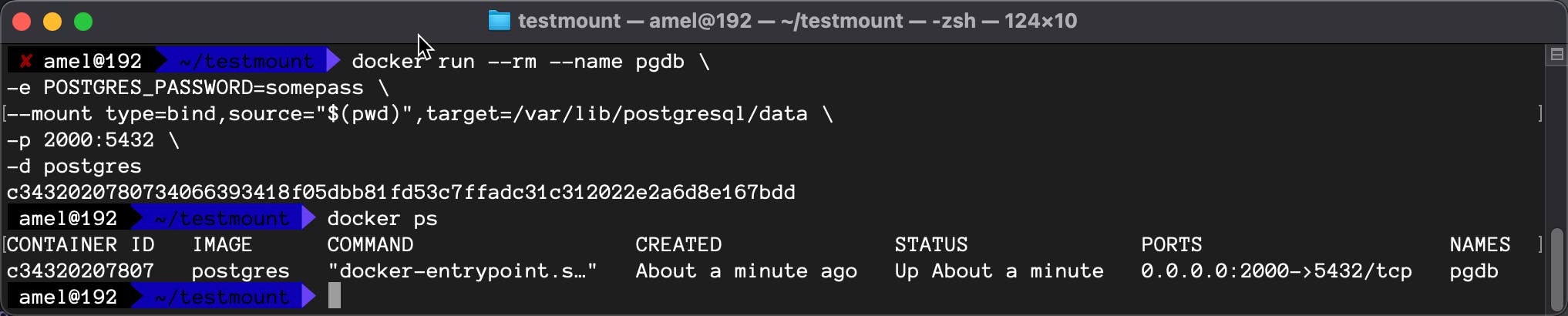

docker run --rm --name pgdb -e POSTGRES_PASSWORD=somepass --mount type=bind,source="$(pwd)",target=/var/lib/postgresql/data -p 2000:5432 -d postgres

Commands explanation:

docker runcreates a writeable container layer over the specified image and then starts it using the selected command--rmwill automatically remove the container when it exits--name pgdbassigns container name pgdb-e POSTGRES_PASSWORD=somepasssets the required environment variable to use the PostgreSQL image, and it must not be empty or undefined. This environment variable specifies the superuser password for PostgreSQL.--mount type=bind,source="$(pwd)",target=/var/lib/postgresql/datacreates thetype=bindmount between the current directory (pwdreturns a current working directory name) and the default directory for the database files in Docker container/var/lib/postgresql/data-p 2000:5432maps the default PostgreSQL port 5432 to the host port 2000 (later, we will use port 2000 to connect to the database instance from the host machine). You can changeport 2000to some other port if that one is already in use.-dwill run the command in the detached mode (it won't lock our terminal). By design, containers started in detached mode exit when the root process used to run the container exits, unless you specify the --rm option. If you use -d with --rm, the container is removed when it exits or when the daemon exits, whichever happens first.postgresis the base PostgreSQL image

Our PostgreSQL container is running, and now it is accessible from the appropriate database tool (such as DBeaver Community). I am using port 2000 and password somepass to connect to our database instance, as we defined earlier in the Docker CLI command.

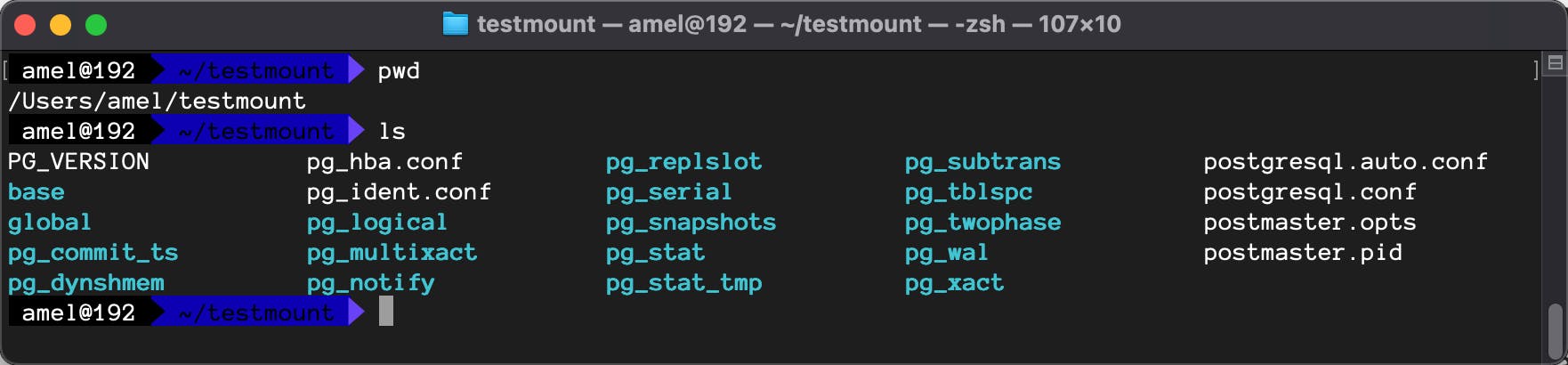

My current working directory is ~/testmount, and running the previous Docker command will create the necessary database files in that folder.

Volume mounts (preferred way)

Docker fully manages docker volumes; therefore, they are unaffected by our directory structure or the host machine's operating system. When we utilize a volume, Docker creates a new directory in the host machine's storage directory, and Docker handles its content. For Docker volumes, storage is not associated with the container's life cycle and resides outside the container.

Some of the advantages:

- kill containers as you need and still retain your data

- attach volumes to multiple containers running at the same time

- reuse storage across multiple containers (for example, one container writes to storage and another reads from storage)

- volumes do not increase the size of the Docker containers that use them

- you can use the Docker CLI to manage your volumes (for example, retrieving a list of volumes or deleting unused volumes).

All commands below follow the same pattern because we use persistence for the container unrelated to which database management system we use. The only things different are environment variables and target directories (because other databases usually use different file locations).

docker run --rm --name <custom-name> \

-e <ENV_VAR_1=some_value_1> \

-e <ENV_VAR_2=some_value_2> \

--mount type=volume,source=<custom-source-name>,target=<database-file-location-in-container> \

-p <host-port>:<container-database-port> \

-d <docker-image-with-or-without-tag>

Commands explanation:

docker runcreates a writeable container layer over the specified image and then starts it using the selected command--rmwill automatically remove the container when it exits--name custom-nameassigns container name custom-name-e ENV_VAR_1=some_value_1 -e ENV_VAR_2=some_value_2sets the required environment variables to use for the database image. Different database management systems require different environment variables. You can find those in the documentation for the specific DBMS.--mount type=volume,source=custom-source-name,target=database-file-location-in-containercreates thetype=volumewith name custom-source-name and mounts it to the default directory for the database files in Docker containerdatabase-file-location-in-container-p host-port:container-database-portmaps the default database port (i.e., 5432 for PostgreSQL, 1433 for SQL Server, 3306 for MariaDB and MySQL, etc.). You can change thehost-portto some free port on your host machine.-dwill run the command in the detached mode (it won't lock our terminal). By design, containers started in detached mode exit when the root process used to run the container exits, unless you specify the --rm option. If you use -d with --rm, the container is removed when it exits or when the daemon exits, whichever happens first.docker-image-with-or-without-versionis the base Docker image from the Docker Hub.

SQL Server

docker run --rm --name our-mssql -e "ACCEPT_EULA=Y" -e "SA_PASSWORD=Str0ngP@ssword" -e "MSSQL_PID=Express" --mount type=volume,source=custommssql,target=/var/opt/mssql -p 7000:1433 -d mcr.microsoft.com/mssql/server:2019-latest

Environment variables:

ACCEPT_EULAconfirms your acceptance of the End-User Licensing Agreement.SA_PASSWORDis the database system administrator (userid = 'sa') password used to connect to SQL Server once the container is running. Important note: This password needs to include at least 8 characters of at least three of these four categories: uppercase letters, lowercase letters, numbers, and non-alphanumeric symbols.MSSQL_PIDis the Product ID (PID) or Edition that the container will run with. Acceptable values: Developer (this is the default if no MSSQL_PID environment variable is supplied), Express, Standard, Enterprise, EnterpriseCore.

A complete list of environment variables can be found here.

Connect using information defined above

- Server Name -

127.0.0.1,7000 - Authentication -

SQL Server Authentication - Username -

sa - Password -

Str0ngP@ssword

PostgreSQL

docker run --rm --name our-postgresql -e POSTGRES_PASSWORD=Str0ngP@ssword --mount type=volume,source=custompostgres,target=/var/lib/postgresql/data -p 7001:5432 -d postgres:latest

Environment variables:

POSTGRES_PASSWORD- This environment variable is required for you to use the PostgreSQL image. It must not be empty or undefined. This environment variable sets the superuser password for PostgreSQL. The default superuser is defined by the POSTGRES_USER environment variable (if not present, the default user ispostgres)

Complete list of environment variables can be found here.

Connect using information defined above

- Server Host -

localhost - Port -

7001 - Username -

postgres - Password -

Str0ngP@ssword

MariaDB

docker run --rm --name our-mariadb -e MARIADB_ROOT_PASSWORD=Str0ngP@ssword --mount type=volume,source=custommariadb,target=/var/lib/mysql -p 7002:3306 -d mariadb:latest

Environment variables:

MARIADB_ROOT_PASSWORDspecifies the password that will be set for the MariaDBrootsuperuser account. In the above example, it was set toStr0ngP@sswordpassword.

A complete list of environment variables can be found here.

Connect using information defined above

- Server Host -

localhost - Port -

7002 - Username -

root - Password -

Str0ngP@ssword

MySQL

docker run --rm --name our-mysql -e MYSQL_ROOT_PASSWORD=Str0ngP@ssword --mount type=volume,source=custommysql,target=/var/lib/mysql -p 7003:3306 -d mysql:latest

Environment variables:

MYSQL_ROOT_PASSWORDspecifies the password set for the MySQLrootsuperuser account. In the above example, it was set to theStr0ngP@sswordpassword.

A complete list of environment variables can be found here.

Connect using information defined above

- Server Host -

localhost - Port -

7003 - Username -

root - Password -

Str0ngP@ssword

MongoDB

docker run --rm --name our-mongo -e MONGO_INITDB_ROOT_USERNAME=someuser -e MONGO_INITDB_ROOT_PASSWORD=Str0ngP@ssword --mount type=volume,source=custommongo,target=/data/db -p 7004:27017 -d mongo:latest

Environment variables:

MONGO_INITDB_ROOT_USERNAMEandMONGO_INITDB_ROOT_PASSWORDwill create a new user and set that user's password. This user is created in the admin authentication database and given the root role, asuperuserrole.

Note: This image will also create a volume for /data/configdb, so you will see an additional hex ID volume in your Docker volumes.

Connect using information defined above

- URI -

mongodb://someuser:Str0ngP@ssword@localhost:7004

Volumes

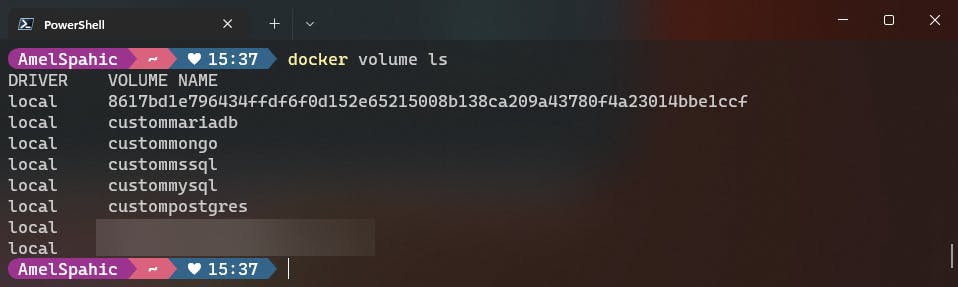

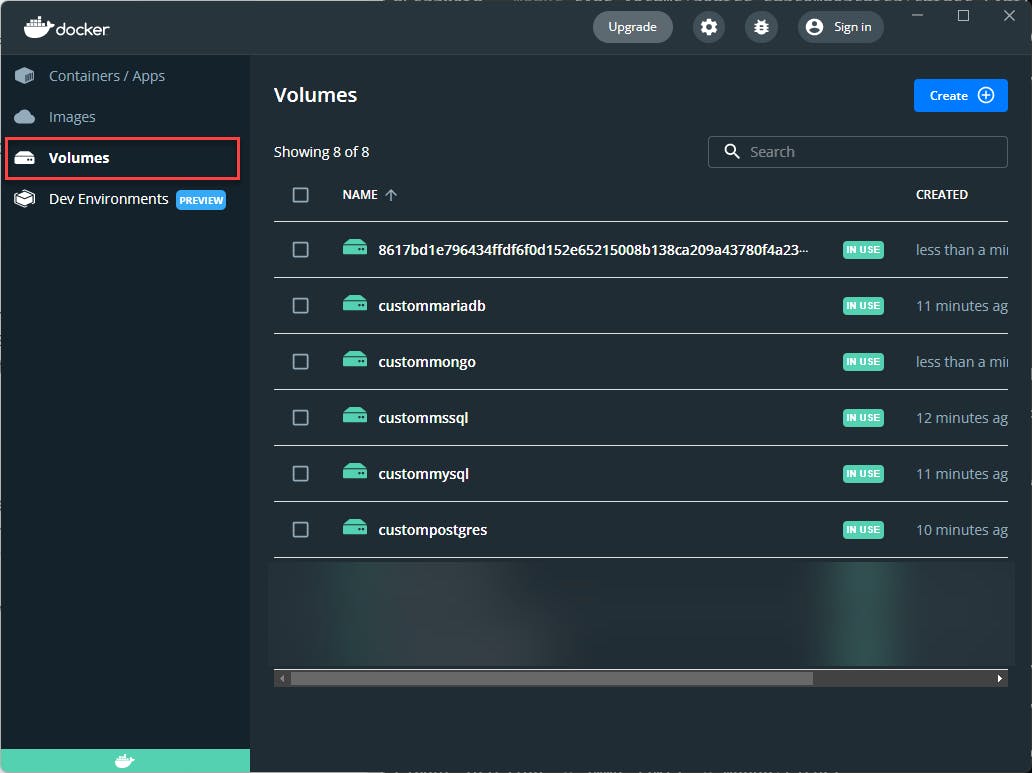

You can check your newly created volume with the command:

docker volume ls

Or you can check your running Docker Desktop instance under the Volumes tab.

Docker Compose

Every previously defined database management system can be run through the docker-compose instead of Docker CLI.

version: '3.4'

services:

db:

image: postgres:latest

restart: always

environment:

- POSTGRES_PASSWORD=Str0ngP@ssword

ports:

- 7000:5432

volumes:

- custommssql:/var/lib/postgresql/data

volumes:

custommssql:

driver: local

Which one to use?

There are a few essential things to consider when determining whether to use volumes or bind mounts. You should utilize volumes if you want your storage or persistent layer to be fully controlled by Docker and accessed only through Docker containers and the Docker CLI.

On the other hand, Bind mounts are the perfect solution for the task if you need complete control of the storage and plan on letting other processes besides Docker access or modify the storage layer.

According to the Docker documentation, using volumes is the most straightforward approach to start persisting data in your Docker container. In general, bind mounts have additional restrictions.

Based on what we've observed thus far, this conclusion is not surprising. If you're ever unsure how to persist data in your Docker containers, using volumes is a wise rule of thumb.

A bind mount is distinguished because it can be accessed and modified by programs other than Docker. This can be advantageous for integrating Docker with other operations, but it can also be inconvenient if security is an issue.

Next Steps

Although we used databases as an example, we described two ways to keep data on Docker containers. The following article will show how to add the database service to the already created application with docker-compose and cover the integration part.